Research Program

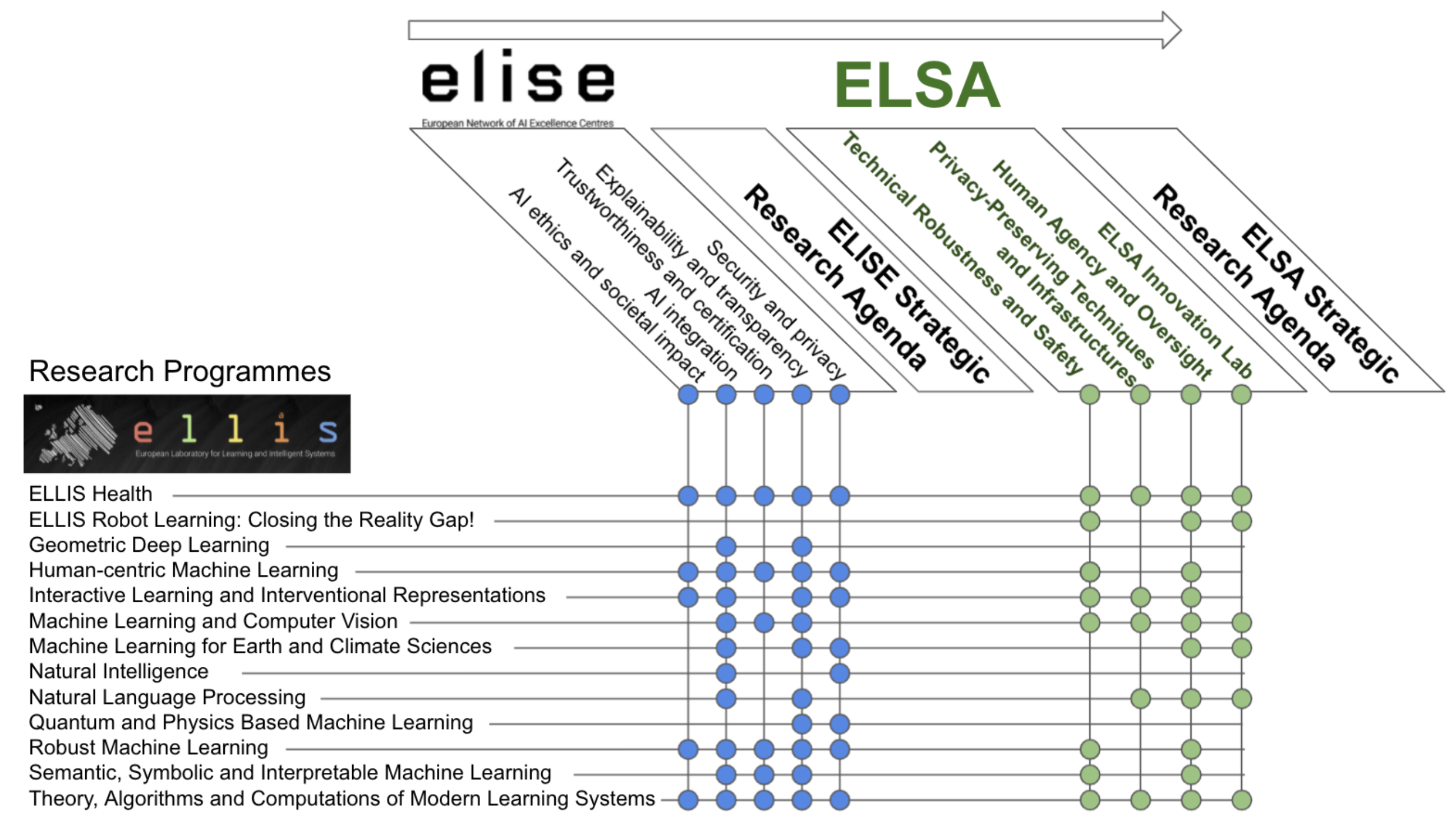

We will build on the published ELISE research agenda, which laid a broad foundation for “Advances in foundations of AI and approaches for trusted AI solutions”. In the following, we refine this research agenda with respect to aspects of safe and secure AI. Throughout the project, the strategic research agenda and the associated grand challenges that hamper deployment will evolve together with stakeholders, with a particular focus on use cases. Below, we describe the ELSA Research Programmes.

Technical robustness and safety

Data-driven AI systems based on deep learning models have recently achieved unprecedented success in many different domains, including visual perception, natural language processing, speech recognition, computer vision and autonomous system planning. However, it is becoming increasingly evident that current deep learning systems suffer from several fundamental issues, including a lack of robustness guarantees, limited resilience to perturbations of the input data, and a tendency to reinforce biases present in the data (Biggio and Roli, 2018). Such fragility could preclude broad adoption in crucial domains where AI can bring substantial benefits to society, such as mobility, healthcare, and cybersecurity. To lift this roadblock, we need new methods for creating safe, robust, and resilient AI systems with (statistically) provable robustness under specific threat models, i.e., models that allow simulating feasible and practical attacks for the applications at hand. Developing such methods, however, is fundamentally challenging, not only because of the complex nature of deep neural models, which require precise reasoning about millions of parameters interacting in a non-linear manner, but also because of the non-trivial nature and variety of the input manipulation strategies that can be envisioned across different application domains (Demetrio et al., 2021; Fischer et al., 2020). While current certification approaches can handle small networks combined with simple robustness properties, i.e., simplistic threat models of data manipulation, scaling them to more complex natural robustness and safety properties, to larger and more complex networks, or to simulator-in-the-loop settings remains a challenging open problem. Another prominent challenge in enabling AI systems to reliably support and make decisions is to develop approaches for accurately quantifying epistemic and aleatoric uncertainty, which arise, respectively, from a lack of data and from sources such as noisy observations or actions.
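To make the notion of certification under a simple threat model concrete, the following is a minimal sketch of interval bound propagation (IBP), one elementary certification technique chosen here purely for illustration; the text does not commit to a specific method. It assumes a small fully-connected ReLU network given as hypothetical lists of weight matrices and bias vectors, and an L∞ threat model in which an attacker may shift each input feature by at most `eps`. The check is sound but incomplete: `True` means the predicted label provably cannot change anywhere in the perturbation box, while `False` only means the bounds were too loose to decide.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def affine_bounds(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Bound y = W x + b over the box lo <= x <= hi, one output coordinate at a time."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l = u = bias
        for w, xl, xu in zip(row, lo, hi):
            if w >= 0:
                # A non-negative weight maps the lower input bound to the lower output bound.
                l += w * xl
                u += w * xu
            else:
                # A negative weight swaps the roles of the input bounds.
                l += w * xu
                u += w * xl
        out_lo.append(l)
        out_hi.append(u)
    return out_lo, out_hi


def relu_bounds(lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """ReLU is monotone, so it can be applied to each bound directly."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def certify(layers: List[Tuple[Matrix, Vector]], x: Vector, eps: float, label: int) -> bool:
    """Sound but incomplete L-inf robustness check for a ReLU network.

    Returns True only if every input within distance eps of x (per feature)
    is guaranteed to be classified as `label`; False means "not proven".
    """
    lo = [v - eps for v in x]
    hi = [v + eps for v in x]
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(W, b, lo, hi)
        if i < len(layers) - 1:  # no ReLU after the output layer
            lo, hi = relu_bounds(lo, hi)
    # The target logit's worst case must beat every other logit's best case.
    return all(lo[label] > hi[j] for j in range(len(lo)) if j != label)


if __name__ == "__main__":
    # Hypothetical toy network: 2 inputs -> 2 hidden ReLU units -> 2 logits.
    layers = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
        ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    ]
    print(certify(layers, [1.0, 0.0], eps=0.1, label=0))  # True
    print(certify(layers, [1.0, 0.0], eps=0.3, label=0))  # False
```

With these toy weights the prediction for `[1.0, 0.0]` is certified at `eps = 0.1`, but at `eps = 0.3` the interval of the competing logit overlaps that of the target, so nothing can be proven. Such interval bounds grow looser with every additional layer, which illustrates why scaling certification to larger networks and richer threat models remains open.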
Relevant research challenges include scaling up Bayesian approaches to deep learning, assessing uncertainty when transferring models across related tasks, and reasoning about uncertainty in data-driven models informed by (e.g., physical) background knowledge (Michelmore et al., 2020; Konig et al., 2020). It is also necessary to take uncertainty appropriately into account in the decision-making process, e.g., to assess and avoid potential risks associated with different actions, or to use uncertainty for exploration and optimized information gathering.
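One common way to separate the two kinds of uncertainty, given samples from an approximate Bayesian posterior (for example the members of a deep ensemble or Monte Carlo dropout passes; this choice is an assumption, not something the text prescribes), is an entropy decomposition. The entropy of the averaged prediction (total uncertainty) equals the average entropy of the individual predictions (aleatoric part) plus the mutual information between the prediction and the model parameters (epistemic part). The sketch below operates on hypothetical per-member class probabilities for a single input; the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def decompose_uncertainty(member_probs: List[Sequence[float]]) -> Tuple[float, float, float]:
    """Split predictive uncertainty into (total, aleatoric, epistemic).

    member_probs[m][c] is the probability that posterior sample m assigns to class c.
    """
    m = len(member_probs)
    k = len(member_probs[0])
    # Posterior predictive: average the members' class distributions.
    mean = [sum(p[c] for p in member_probs) / m for c in range(k)]
    total = entropy(mean)
    # Expected entropy of each member: noise the model believes is inherent in the data.
    aleatoric = sum(entropy(p) for p in member_probs) / m
    # Mutual information: disagreement between members, which more data could reduce.
    # It is non-negative in exact arithmetic; clamp to absorb floating-point round-off.
    epistemic = max(0.0, total - aleatoric)
    return total, aleatoric, epistemic
```

Two members that both predict `[0.5, 0.5]` yield purely aleatoric uncertainty (ln 2, with an epistemic term of 0), whereas two confident members that disagree, `[1.0, 0.0]` and `[0.0, 1.0]`, yield purely epistemic uncertainty (ln 2, with an aleatoric term of 0). Because the epistemic term shrinks as data accumulate, it is a natural signal for the exploration and risk-avoidance uses mentioned above.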

Privacy-preserving techniques and infrastructure

Modern AI and deep learning technologies depend on massive amounts of data, often about individuals. These data can create benefits for individuals and society, but their misuse can cause digital harms. These threats can be mitigated by limiting access to the raw data through encryption, and by learning from distributed data with technologies such as secure federated learning (Kairouz et al., 2021). Trained models can also leak sensitive information unless they are protected using technologies such as differential privacy (DP; Dwork et al., 2006). DP can provide provable guarantees against harm to data subjects, but models with strong guarantees often suffer from low utility, and there is often a significant gap between the formal guarantees and the best known attacks, meaning that models may be more private than we can formally prove (Nasr et al., 2021). Still, theoretically verified guarantees such as DP are the only way to truly satisfy the anonymity requirements set out in the GDPR when data or models are made publicly available online (Cohen and Nissim, 2020). Key challenges in privacy-preserving and distributed learning that require significant investment in new fundamental research include closing the gap between theoretical upper bounds on privacy loss and the privacy risks observed in practice, developing federated and distributed learning methods that combine efficiency, security, privacy and robustness, and making these methods available as provably secure implementations that are open and interoperable. Methods for learning personalised models (e.g., Mauer et al., 2016) and incentives for participants (e.g., Biswas et al., 2021) will create greater opportunities for collaborative learning.
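The combination of federated learning and DP discussed above can be illustrated with a minimal sketch of one server-side aggregation round in the style of DP-FedAvg: each client's model update is clipped to a maximum L2 norm, the clipped updates are summed, and Gaussian noise proportional to that norm is added before averaging. Clipping bounds how much any single participant can change the sum (by at most `clip_norm`), which is what allows the added noise to yield a participant-level DP guarantee. The function names and parameters (`clip_norm`, `noise_multiplier`) are illustrative assumptions. The sketch deliberately omits the privacy accountant that converts the noise level and number of rounds into a concrete (ε, δ), as well as the secure aggregation or encryption that would hide individual updates from the server.

```python
import math
import random
from typing import List, Optional

Vector = List[float]


def clip(update: Vector, clip_norm: float) -> Vector:
    """Scale an update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def dp_federated_average(client_updates: List[Vector], clip_norm: float,
                         noise_multiplier: float,
                         rng: Optional[random.Random] = None) -> Vector:
    """One hypothetical DP aggregation round: clip, sum, add Gaussian noise, average.

    No privacy accounting is done here; choosing noise_multiplier for a target
    (epsilon, delta) over many rounds requires a separate accountant.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    rng = rng or random.Random()
    n = len(client_updates)
    clipped = [clip(u, clip_norm) for u in client_updates]
    dim = len(clipped[0])
    summed = [sum(u[i] for u in clipped) for i in range(dim)]
    # Adding or removing one client changes the sum by at most clip_norm (L2),
    # so the noise standard deviation is calibrated to that sensitivity.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / n for v in noisy]
```

With `noise_multiplier=0.0` the function reduces to averaging clipped updates, which makes the effect of clipping easy to inspect: `[3.0, 4.0]` is shrunk to `[0.6, 0.8]` before averaging. Raising the multiplier strengthens the guarantee at the cost of a noisier model, which is the utility trade-off noted above.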

Human agency and oversight

Robust technical standards will not deliver safe and secure AI in Europe unless and until they are embedded within a legitimate and effective governance architecture that provides meaningful human oversight demonstrably in accordance with core European values: namely, respect for democracy, human rights (including the protection of safety and security) and the rule of law (EU, 2018; Congress, 2019). While EU policy-makers have produced several legal oversight regimes, including the GDPR, the EU Medical Device Regulation, and the recently proposed EU AI Act, the resulting governance architecture, and the mechanisms through which compliance with these goals will be achieved and assured, remain unclear and untested. Therefore, cross-disciplinary research involving technical experts as well as legal and governance experts is vital for integrating and embedding technical methods within ethical and governance regimes that are designed to provide meaningful and evidence-based assurance (‘AI Assurance’) (Yeung et al., 2020). Transparency, explainability and interpretability (including “by design” rather than post hoc) of technical AI solutions play a critically important role in understanding and verifying the reasoning and decision-making processes that such AI systems follow (Babic et al., 2021; Rudin, 2019). The Grand Challenge is to devise adequate, integrated governance frameworks, methods and mechanisms that enable meaningful human oversight aligned with European values (BEUC, 2021), and to enable the widespread take-up and deployment of AI applications that could significantly enhance individual and collective well-being.